Overview

Our method allows learning continuous semantic controls from simple annotations in symbolic music generation. On this page, we show additional experimental results for 12 attributes from jSymbolic, along with audio samples and the feature descriptions from the official webpage. The implementation code is available here.

Single attribute

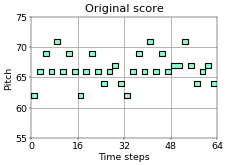

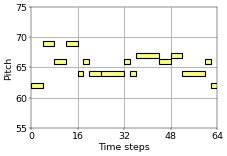

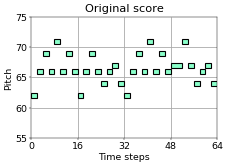

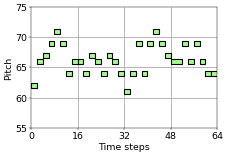

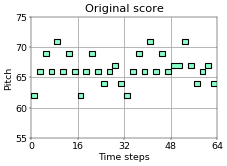

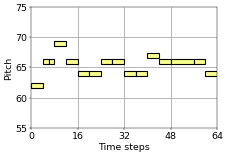

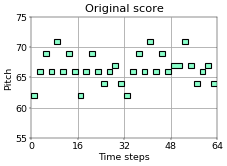

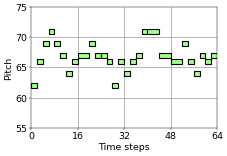

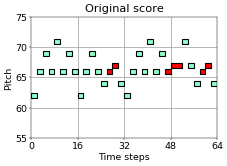

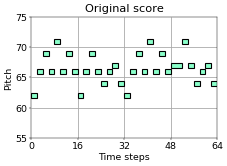

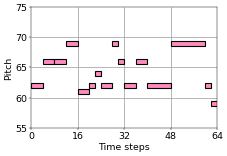

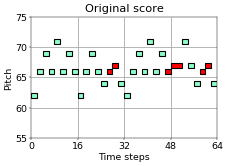

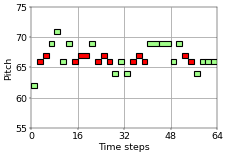

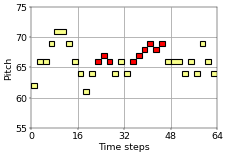

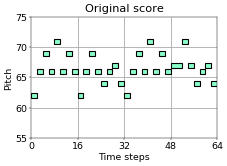

In this setting, only one attribute is trained at a time. For each attribute, the leftmost score is the original data.

Total number of notes

The total number of notes, including both pitched and unpitched notes.

| →Reduce total number of notes→ | | |
|---|---|---|
| | | |

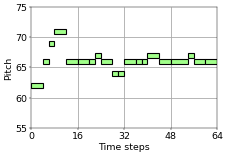

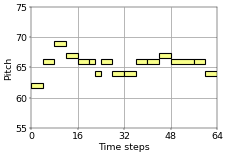

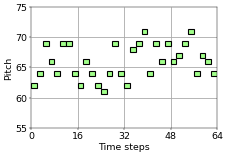

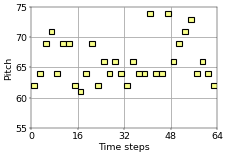

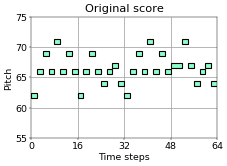

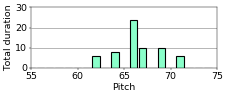

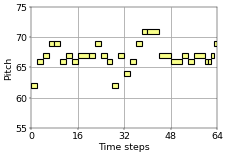

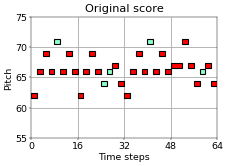

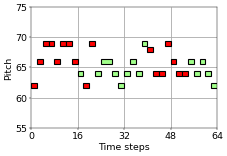

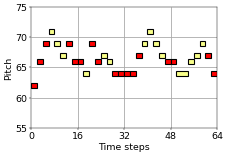

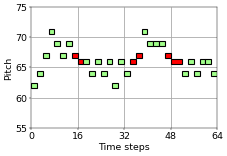

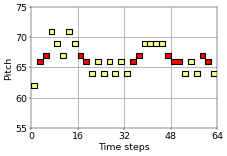

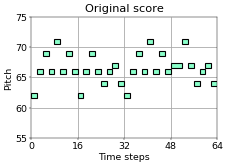

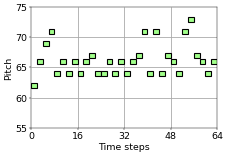

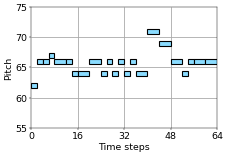

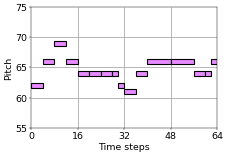

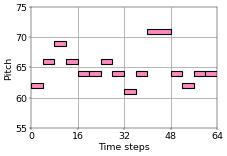

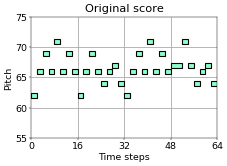

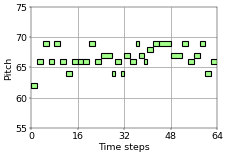

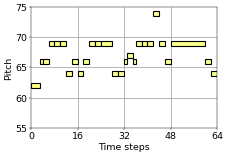

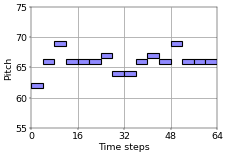

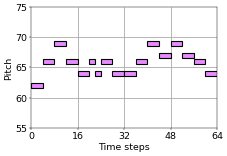

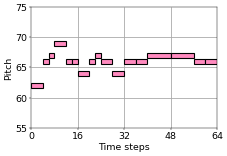

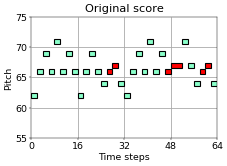

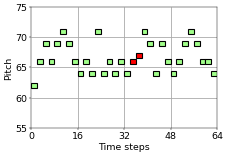

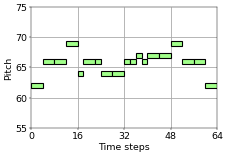

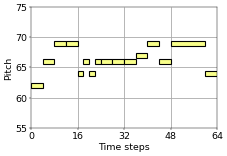

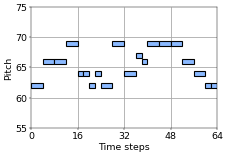

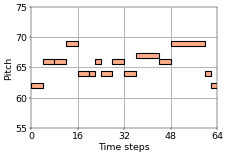

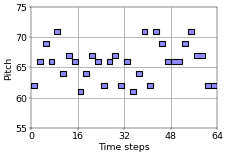

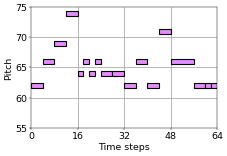

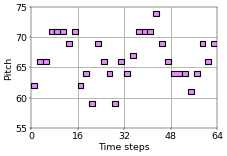

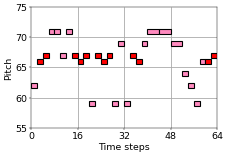

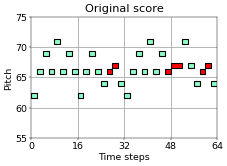

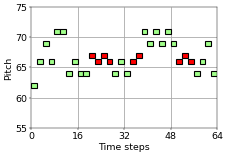

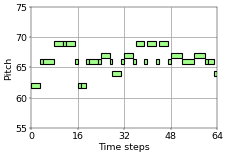

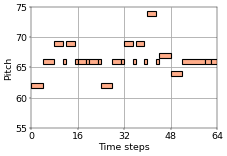

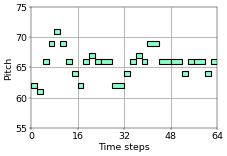

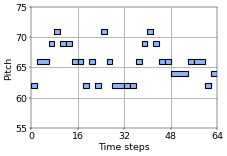

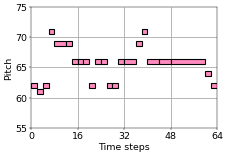

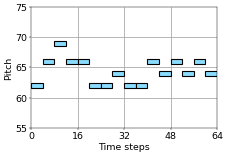

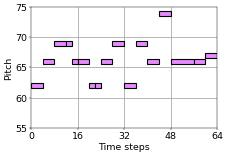

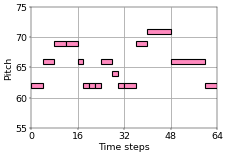

Pitch variability

The standard deviation of the MIDI pitches of all pitched notes in the piece. It provides a measure of how close the pitches as a whole are to the mean pitch.
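As a rough illustration of the definition above, the feature can be sketched in a few lines of Python. This is not the jSymbolic implementation: the function name `pitch_variability` is hypothetical, and using the population (rather than sample) standard deviation is an assumption.

```python
import statistics


def pitch_variability(midi_pitches):
    """Standard deviation of MIDI pitch numbers (hypothetical helper).

    Assumption: population standard deviation; an empty input yields 0.0.
    """
    if not midi_pitches:
        return 0.0
    return statistics.pstdev(midi_pitches)


# A melody that stays near its mean pitch vs. one that leaps widely.
narrow = [60, 62, 64, 62, 60]
wide = [48, 72, 55, 79, 60]
print(pitch_variability(narrow), pitch_variability(wide))
```

Under this sketch, the widely leaping melody yields the larger value, which is the direction the control is meant to push.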

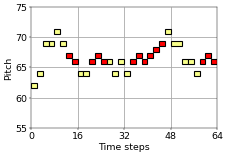

| →Increase pitch variability→ | | |
|---|---|---|
| | | |

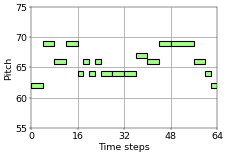

Rhythmic value variability

The standard deviation of the note durations in quarter notes of all notes in the music. It provides a measure of how close the rhythmic values are to the mean rhythmic value. Its calculation includes both pitched and unpitched notes, is calculated after rhythmic quantization, is not influenced by tempo, and is calculated without regard to the dynamics, voice, or instrument of any given note.
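The following sketch illustrates why this feature does not depend on tempo: durations are measured in quarter notes rather than seconds. The function name, the `ticks_per_quarter` default, the sixteenth-note quantization grid, and the population standard deviation are all assumptions made for illustration, not details taken from jSymbolic.

```python
import statistics


def rhythmic_value_variability(durations_ticks, ticks_per_quarter=480, grid=0.25):
    """Standard deviation of note durations in quarter notes (hypothetical helper).

    Assumptions: durations arrive in MIDI ticks, are snapped to a grid of
    `grid` quarter notes (0.25 = sixteenth note), and the population
    standard deviation is used.
    """
    if not durations_ticks:
        return 0.0
    quantized = [
        round(ticks / ticks_per_quarter / grid) * grid for ticks in durations_ticks
    ]
    return statistics.pstdev(quantized)


# Slightly imprecise eighth and dotted-quarter notes snap to 0.5 and 1.5.
print(rhythmic_value_variability([250, 710]))
```

Because the input is in ticks per quarter note, playing the same material at a different tempo leaves the result unchanged.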

The model can increase the variety of the note durations according to the attribute control.

| →Increase rhythmic value variability→ | | |
|---|---|---|
| | | |

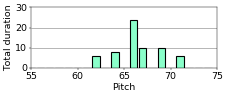

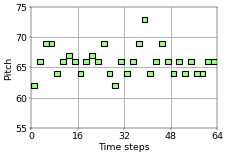

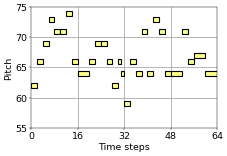

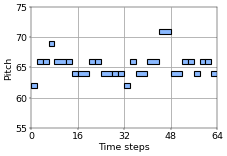

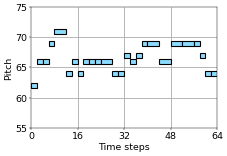

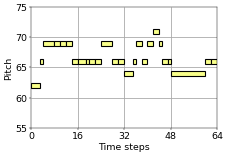

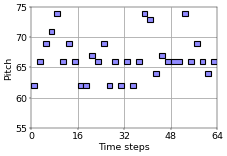

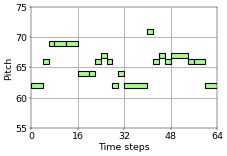

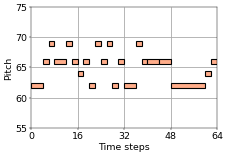

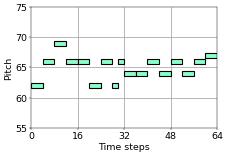

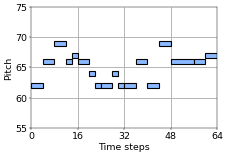

Pitch kurtosis

The kurtosis of the MIDI pitches of all pitched notes in the piece. It provides a measure of how peaked or flat the pitch distribution is. The higher the kurtosis, the more the pitches are clustered near the mean and the fewer outliers there are.
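To make the definition concrete, here is a minimal sketch that computes kurtosis as the fourth standardized moment. The function name is hypothetical, and the convention (plain rather than excess kurtosis, population moments, 0.0 for constant input) is an assumption; jSymbolic may use a different one.

```python
def pitch_kurtosis(midi_pitches):
    """Fourth standardized moment of MIDI pitches (hypothetical helper).

    Assumptions: population moments, non-excess kurtosis, and 0.0 when
    the variance is zero or the input is empty.
    """
    n = len(midi_pitches)
    if n == 0:
        return 0.0
    mean = sum(midi_pitches) / n
    m2 = sum((p - mean) ** 2 for p in midi_pitches) / n
    if m2 == 0:
        return 0.0
    m4 = sum((p - mean) ** 4 for p in midi_pitches) / n
    return m4 / m2 ** 2


# Mostly one pitch with two rare extremes vs. evenly spread pitches.
peaked = [60] * 8 + [48, 72]
flat = [56, 58, 60, 62, 64]
print(pitch_kurtosis(peaked), pitch_kurtosis(flat))
```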

The results show that the model can decrease the pitch kurtosis successfully in this example.

| →Decrease pitch kurtosis→ | | |
|---|---|---|
| | | |

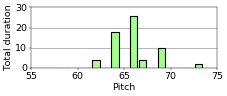

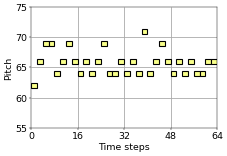

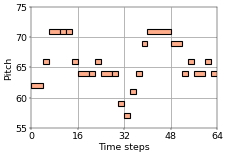

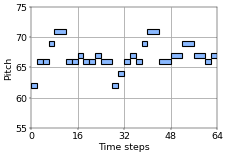

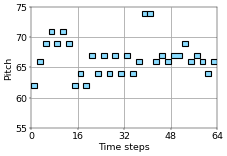

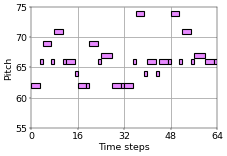

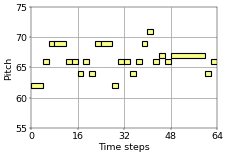

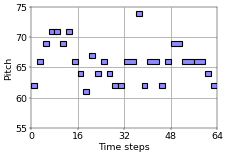

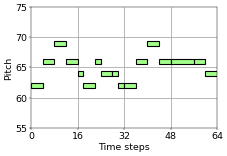

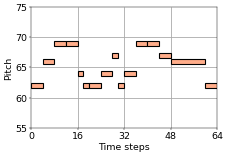

Pitch skewness

The skewness of the MIDI pitches of all pitched notes in the piece. It provides a measure of how asymmetrical the pitch distribution is to either the left or the right of the mean pitch. A value of zero indicates no skew.
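The definition can be sketched as the third standardized moment of the pitch sequence. The function name is hypothetical, and the use of population moments (with 0.0 for constant or empty input) is an assumption rather than a statement about how jSymbolic computes it.

```python
def pitch_skewness(midi_pitches):
    """Third standardized moment of MIDI pitches (hypothetical helper).

    Assumptions: population moments, and 0.0 when the variance is zero
    or the input is empty.
    """
    n = len(midi_pitches)
    if n == 0:
        return 0.0
    mean = sum(midi_pitches) / n
    m2 = sum((p - mean) ** 2 for p in midi_pitches) / n
    if m2 == 0:
        return 0.0
    m3 = sum((p - mean) ** 3 for p in midi_pitches) / n
    return m3 / m2 ** 1.5


# One high outlier gives positive skew; one low outlier gives negative skew.
print(pitch_skewness([60, 60, 60, 72]), pitch_skewness([60, 72, 72, 72]))
```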

As shown in the paper, this attribute is relatively difficult to train. In this example, the pitch skewness of the results decreases even though we increase the value of the attribute condition.

| →Increase pitch skewness→ | | |
|---|---|---|
| | | |

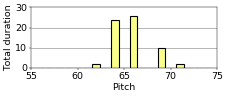

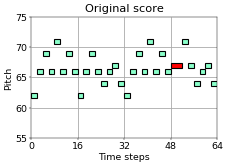

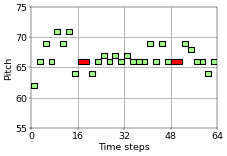

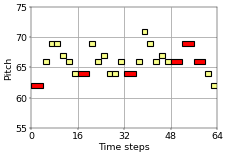

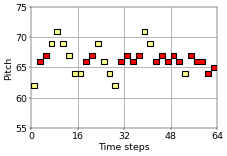

Prevalence of most common rhythmic value

The fraction of all notes that have a rhythmic value corresponding to the most common rhythmic value. Its calculation includes both pitched and unpitched notes, is calculated after rhythmic quantization, is not influenced by tempo, and is calculated without regard to the dynamics, voice, or instrument of any given note.
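A minimal sketch of this fraction, assuming the rhythmic values have already been quantized and are given in quarter notes (so 0.5 stands for an 8th note). The function name is hypothetical.

```python
from collections import Counter


def prevalence_of_most_common_rhythmic_value(rhythmic_values):
    """Share of notes that have the most frequent rhythmic value.

    Assumption: `rhythmic_values` are already quantized durations in
    quarter notes; an empty input yields 0.0.
    """
    if not rhythmic_values:
        return 0.0
    _, count = Counter(rhythmic_values).most_common(1)[0]
    return count / len(rhythmic_values)


# Three 8th notes and one quarter note.
print(prevalence_of_most_common_rhythmic_value([0.5, 0.5, 0.5, 1.0]))
```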

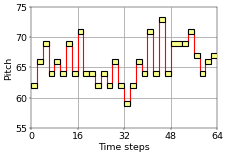

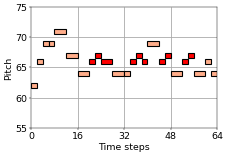

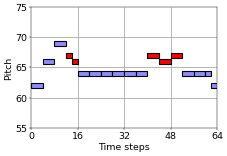

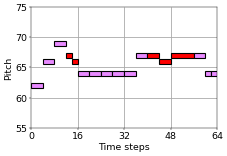

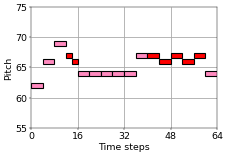

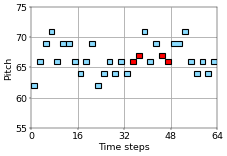

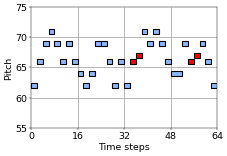

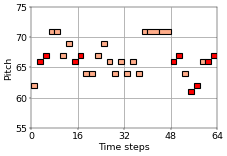

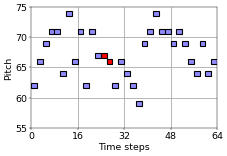

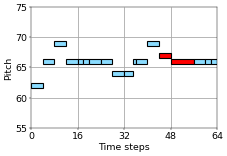

The most common rhythmic value is the 8th note in this example. The notes that are NOT 8th notes are shown in red. The model can decrease the prevalence of 8th notes according to the control.

| →Decrease the prevalence of 8th notes→ | | |
|---|---|---|
| | | |

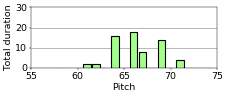

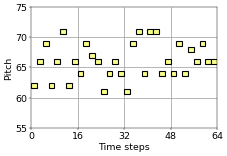

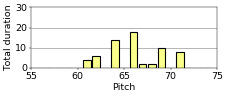

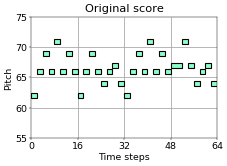

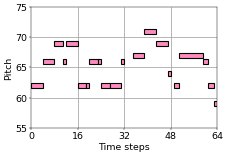

Average note duration

The average duration of notes (in seconds).

| →Increase average note duration→ | | |
|---|---|---|
| | | |

Note density variability

How much the note density (average number of notes per second) varies throughout the piece. It takes into account all notes in all voices, including both pitched and unpitched notes. To calculate this value, the piece is broken into windows of 5-second duration, and the note density of each window is calculated. The final value of this feature is then found by calculating the standard deviation of the note densities of these windows. It is set to 0 if there is insufficient music for more than one window.
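The windowed procedure described above can be sketched as follows. The function and parameter names are hypothetical, and two details are assumptions: only complete 5-second windows are counted, and the population standard deviation is used.

```python
import statistics


def note_density_variability(onsets_sec, piece_length_sec, window_sec=5.0):
    """Standard deviation of per-window note densities (hypothetical helper).

    Assumptions: only complete windows are counted, a note belongs to the
    window containing its onset, and population standard deviation is used.
    """
    n_windows = int(piece_length_sec // window_sec)
    if n_windows < 2:
        # Not enough music for more than one window.
        return 0.0
    counts = [0] * n_windows
    for onset in onsets_sec:
        index = int(onset // window_sec)
        if 0 <= index < n_windows:
            counts[index] += 1
    densities = [count / window_sec for count in counts]
    return statistics.pstdev(densities)


# Five notes in the first window, one in the second.
print(note_density_variability([0, 1, 2, 3, 4, 5], piece_length_sec=10))
```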

| →Increase note density variability→ | | |
|---|---|---|
| | | |

Amount of arpeggiation

The fraction of melodic intervals that are repeated notes, minor thirds, major thirds, perfect fifths, minor sevenths, major sevenths, octaves, minor tenths, or major tenths. This is only a very approximate measure of the amount of arpeggiation in the music.
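The interval list above maps to a fixed set of semitone distances, which makes the feature easy to sketch for a monophonic pitch sequence. The names below are hypothetical, and treating intervals by absolute size (ignoring direction) is an assumption.

```python
# Semitone sizes of the listed intervals: repeated note, minor/major third,
# perfect fifth, minor/major seventh, octave, minor/major tenth.
ARPEGGIO_INTERVALS = {0, 3, 4, 7, 10, 11, 12, 15, 16}


def amount_of_arpeggiation(midi_pitches):
    """Fraction of melodic intervals that fall in ARPEGGIO_INTERVALS.

    Assumptions: a monophonic pitch sequence, absolute interval sizes,
    and 0.0 when there are no intervals.
    """
    intervals = [abs(b - a) for a, b in zip(midi_pitches, midi_pitches[1:])]
    if not intervals:
        return 0.0
    hits = sum(1 for interval in intervals if interval in ARPEGGIO_INTERVALS)
    return hits / len(intervals)


# C-E-G-C: major third, minor third, then a perfect fourth (not in the set).
print(amount_of_arpeggiation([60, 64, 67, 72]))
```

The last interval in the usage example shows why the measure is only approximate: a broken C major chord still contains a perfect fourth, which the definition does not count.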

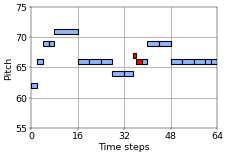

Successive notes whose interval matches the definition of “arpeggiation” are shown in red. The results show that the model can control this attribute appropriately.

| →Decrease amount of arpeggiation→ | | |
|---|---|---|
| | | |

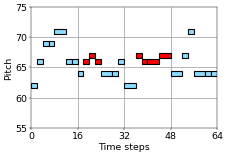

Chromatic motion

The fraction of melodic intervals that correspond to a semitone.
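A minimal sketch of this fraction for a monophonic pitch sequence. The function name is hypothetical, and counting both ascending and descending semitones is an assumption.

```python
def chromatic_motion(midi_pitches):
    """Fraction of melodic intervals that are exactly one semitone.

    Assumptions: a monophonic pitch sequence, semitones counted in both
    directions, and 0.0 when there are no intervals.
    """
    intervals = [abs(b - a) for a, b in zip(midi_pitches, midi_pitches[1:])]
    if not intervals:
        return 0.0
    return sum(1 for interval in intervals if interval == 1) / len(intervals)


# C, C#, D, E: two semitone steps followed by a whole tone.
print(chromatic_motion([60, 61, 62, 64]))
```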

Successive notes whose interval is a semitone, which corresponds to chromatic motion, are shown in red.

| →Increase chromatic motion→ | | |
|---|---|---|
| | | |

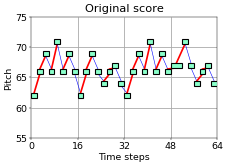

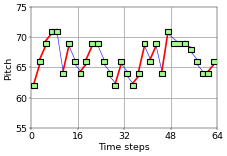

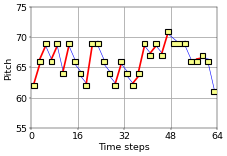

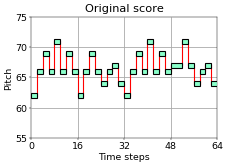

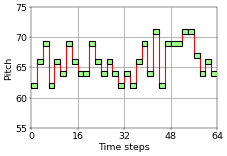

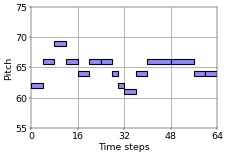

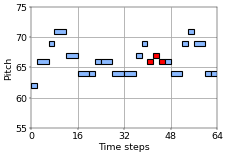

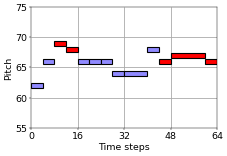

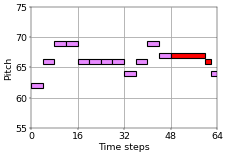

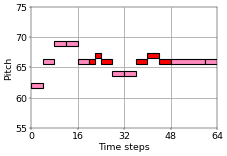

Direction of melodic motion

The fraction of melodic intervals that are rising in pitch. It is set to zero if no rising or falling melodic intervals are found.
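The definition, including its zero fallback, can be sketched as below. The function name is hypothetical, and dividing by the number of rising plus falling intervals (so that repeated notes are ignored) is an assumption about how the fraction is normalized.

```python
def direction_of_melodic_motion(midi_pitches):
    """Fraction of moving melodic intervals that rise in pitch.

    Assumption: repeated notes are excluded from the denominator. Returns
    0.0 when no rising or falling intervals are found.
    """
    steps = [b - a for a, b in zip(midi_pitches, midi_pitches[1:])]
    rising = sum(1 for step in steps if step > 0)
    falling = sum(1 for step in steps if step < 0)
    if rising + falling == 0:
        return 0.0
    return rising / (rising + falling)


# Up, down, up: two of the three intervals rise.
print(direction_of_melodic_motion([60, 62, 61, 65]))
```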

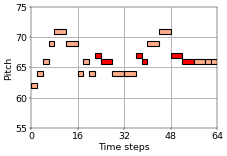

Rising intervals are shown in red and all others in blue. The results indicate that the model successfully changes the fraction of rising intervals.

| →Decrease the rising-pitch intervals→ | | |
|---|---|---|
| | | |

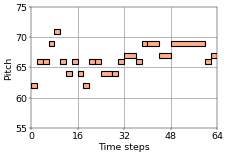

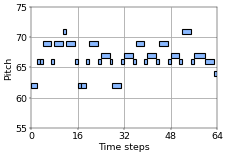

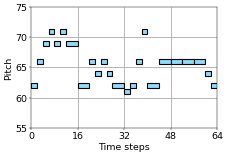

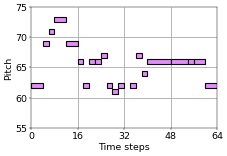

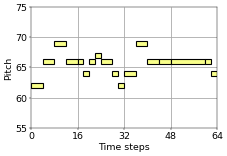

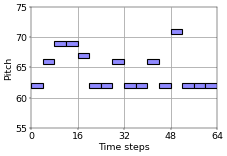

Average interval spanned by melodic arcs

The average melodic interval (in semitones) separating the top note of melodic peaks and the bottom note of adjacent melodic troughs.
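One way to read this definition is to locate the turning points of the melodic contour and average the distance between neighbouring ones. The sketch below follows that reading. The function name is hypothetical, and several choices are assumptions rather than jSymbolic behaviour: repeated pitches are collapsed, the first and last notes are not treated as peaks or troughs, and 0.0 is returned when fewer than two turning points exist.

```python
def average_interval_spanned_by_melodic_arcs(midi_pitches):
    """Mean semitone distance between adjacent melodic peaks and troughs.

    Assumptions: repeated pitches are collapsed, only interior turning
    points count, and 0.0 is returned when no arc can be measured.
    """
    # Collapse immediate repetitions so a plateau counts as one point.
    contour = [
        p for i, p in enumerate(midi_pitches) if i == 0 or p != midi_pitches[i - 1]
    ]
    # A turning point is where the direction of motion changes sign.
    extrema = [
        contour[i]
        for i in range(1, len(contour) - 1)
        if (contour[i] - contour[i - 1]) * (contour[i + 1] - contour[i]) < 0
    ]
    spans = [abs(b - a) for a, b in zip(extrema, extrema[1:])]
    return sum(spans) / len(spans) if spans else 0.0


# Peak at 67, trough at 60, peak at 72: spans of 7 and 12 semitones.
print(average_interval_spanned_by_melodic_arcs([60, 64, 67, 62, 60, 65, 72, 70]))
```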

| →Increase melodic arcs interval span→ | | |
|---|---|---|
| | | |

Double attributes

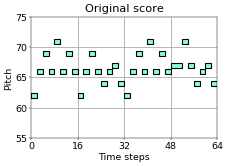

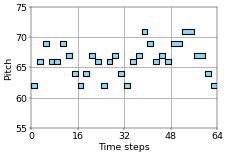

In this section, two attributes are trained at the same time. The original data is shown at the top-left in each setting.

Total number of notes & pitch variability

The model can control the total number of notes and pitch variability at the same time.

| ↓Reduce total number of notes↓ | →Increase pitch variability→ | |
|---|---|---|
| | | |
| | | |
| | | |

Total number of notes & rhythmic value variability

When the model tries to increase rhythmic value variability while keeping the total number of notes fixed, it adds both longer and shorter notes so that a wider variety of note lengths is used.

| ↓Reduce total number of notes↓ | →Increase rhythmic value variability→ | |
|---|---|---|
| | | |
| | | |
| | | |

Total number of notes & chromatic motion

Successive notes whose interval is a semitone, which corresponds to chromatic motion, are shown in red. Compared to the total number of notes or pitch variability, chromatic motion is difficult to train, but the model can still control this attribute in most cases.

| ↓Reduce total number of notes↓ | →Increase chromatic motion→ | |
|---|---|---|
| | | |
| | | |
| | | |

Pitch variability & rhythmic value variability

The model can control pitch variability and rhythmic value variability at the same time.

| ↓Increase pitch variability↓ | →Increase rhythmic value variability→ | |
|---|---|---|
| | | |
| | | |
| | | |

Pitch variability & chromatic motion

Successive notes whose interval is a semitone, which corresponds to chromatic motion, are shown in red.

| ↓Increase pitch variability↓ | →Increase chromatic motion→ | |
|---|---|---|
| | | |
| | | |
| | | |

Rhythmic value variability & chromatic motion

Successive notes whose interval is a semitone, which corresponds to chromatic motion, are shown in red.

| ↓Increase rhythmic value variability↓ | →Increase chromatic motion→ | |
|---|---|---|
| | | |
| | | |
| | | |

Triple attributes

In this section, three attributes are trained at the same time.

Total number of notes, pitch variability, and rhythmic value variability

Our proposed model can control three attributes independently.

| Default total number of notes | | |
|---|---|---|
| ↓Increase pitch variability↓ | →Increase rhythmic value variability→ | |
| | | |
| | | |
| | | |
| Fewer notes in total | | |
| ↓Increase pitch variability↓ | →Increase rhythmic value variability→ | |
| | | |
| | | |
| | | |
| Far fewer notes in total | | |
| ↓Increase pitch variability↓ | →Increase rhythmic value variability→ | |
| | | |
| | | |
| | | |